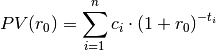

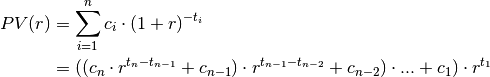

However, sometimes it is possible to get a value for the present value (PV) from the market, eg if a standardized bond is traded.

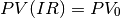

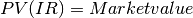

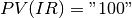

Then there is a high chance that the PV based on the calculation rate differs from the observed market value.

A reasonable question is which calculation rate leads to the observed market

value.

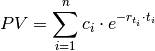

This leads to the following definitions. First a calculation principle:

So it is easy to find the internal rate when all cash flows are of the same sign.

And this way we get a unique Mark To Market rate given a market value.
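The uniqueness argument suggests a simple numerical procedure: when all cashflows have the same sign the PV is monotone in the rate, so plain bisection is enough. The sketch below only illustrates that idea with ordinary Python floats. The names `present_value` and `internal_rate` and the search bracket are assumptions made for this example; they are not part of the finance package.

```python
def present_value(rate, cashflows, times):
    """Classical formula: sum of c_i * (1 + rate) ** -t_i."""
    return sum(c * (1.0 + rate) ** -t for c, t in zip(cashflows, times))


def internal_rate(market_value, cashflows, times, low=-0.99, high=10.0, tol=1e-12):
    """Bisection for the rate where the PV equals market_value.

    Assumes positive cashflows at positive times, so the PV is strictly
    decreasing in the rate and a bracketed root is unique.
    """
    pv_low = present_value(low, cashflows, times)
    pv_high = present_value(high, cashflows, times)
    if not pv_high <= market_value <= pv_low:
        raise ValueError("market value is not bracketed by [low, high]")
    while high - low > tol:
        mid = (low + high) / 2.0
        if present_value(mid, cashflows, times) > market_value:
            low = mid   # PV still too high, so the rate must be higher
        else:
            high = mid  # PV too low (or equal), so the rate must be lower
    return (low + high) / 2.0


# Hypothetical bond: 10% coupon, first payment after 0.783 years.
cashflows = [0.1, 0.1, 0.1, 0.1, 1.1]
times = [0.783, 1.783, 2.783, 3.783, 4.783]
rate = internal_rate(1.0, cashflows, times)
print(round(rate, 6))
```

With cashflows of mixed sign the PV need not be monotone, and the bracket check above is no longer enough to guarantee a unique rate.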

According to some authors the best way to evaluate the present value formula is

to use a variant of Horner’s Method:

The reason for this is that when the times (t) become large, the discount factors become close to zero and rounding errors might appear.
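To make the idea concrete, here is a minimal sketch of one possible Horner-style evaluation for payments at sorted, possibly non-integer times. The nested form used here, PV = d^t1 (c1 + d^(t2-t1) (c2 + ...)) with d = 1/(1+r), is an assumption about what such a variant may look like. It is not taken from the finance or decimalpy packages, and the function names are made up for the example.

```python
def pv_classical(rate, cashflows, times):
    """Classical formula: each payment is discounted separately."""
    return sum(c * (1.0 + rate) ** -t for c, t in zip(cashflows, times))


def pv_horner(rate, cashflows, times):
    """Nested (Horner-like) evaluation; times must be sorted ascending."""
    if not cashflows:
        return 0.0
    discount = 1.0 / (1.0 + rate)
    result = 0.0
    previous_time = None
    # Walk backwards from the last payment, discounting only over the
    # gap between neighbouring payment times in each step.
    for c, t in zip(reversed(cashflows), reversed(times)):
        if previous_time is not None:
            result *= discount ** (previous_time - t)
        result += c
        previous_time = t
    # Finally discount from the first payment time back to time 0.
    return result * discount ** times[0]


cf = [0.1, 0.1, 0.1, 0.1, 1.1]
ts = [0.783, 1.783, 2.783, 3.783, 4.783]
print(pv_classical(0.1, cf, ts), pv_horner(0.1, cf, ts))
```

Each step only raises the discount factor to a small power (the gap between payments), which is the property the rounding argument relies on.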

In the finance package

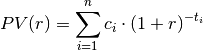

however, it has been chosen to use the classical formula for evaluation, ie:

The reason for this is the wish to use vector based calculations throughout the

package.

In the package decimalpy a datatype PolyExponents is made to implement the Horner method.

First construct the npv as a function of 1 + r

>>> from decimal import Decimal

>>> from decimalpy import Vector, PolyExponents

>>> cf = Vector(5, 0.1)

>>> cf[-1] += 1

>>> cf

Vector([0.1, 0.1, 0.1, 0.1, 1.1])

>>> times = Vector(range(0,5)) + 0.783

>>> times_and_payments = dict(zip(-times, cf))

>>> npv = PolyExponents(times_and_payments, '(1+r)')

>>> npv

<PolyExponents( 0.1 (1+r)^-0.783

+ 0.1 (1+r)^-1.783

+ 0.1 (1+r)^-2.783

+ 0.1 (1+r)^-3.783

+ 1.1 (1+r)^-4.783

)>

Get the npv at rate 10%, ie 1 + r = 1.1:

>>> OnePlusR = 1.1

>>> npv(OnePlusR)

Decimal('1.020897670129900750434884605')

Now find the internal rate, ie npv = 1. Note that the default starting value is 0, which isn’t a good starting point in this case. A far better starting point is 1, which is the second parameter in the call of the method inverse:

>>> npv.inverse(1, 1) - 1

Decimal('0.105777770945873634162979715')

So the internal rate is approximately 10.58%

Now let’s add some discount factors, eg discounting with 5% p.a. The discount factors are:

>>> discount = Decimal('1.05') ** - times

And the discounted cashflows are:

>>> disc_npv = npv * discount

>>> disc_npv

<PolyExponents(

0.09625178201551631581068644778 x^-0.783

+ 0.09166836382430125315303471217 x^-1.783

+ 0.08730320364219166966955686873 x^-2.783

+ 0.08314590823065873301862558927 x^-3.783

+ 0.8710523719402343459094109352 x^-4.783)>

And the internal rate is:

>>> disc_npv.inverse(1, 1) - 1

Decimal('0.053121686615117746821885443')

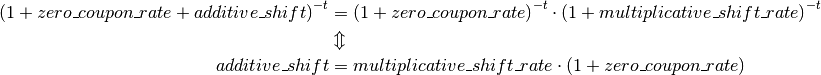

And now it is seen that the internal rate is a multiplicative spread:

>>> disc_npv.inverse(1, 1) * Decimal('1.05') - 1

Decimal('0.105777770945873634162979715')

which is the same rate as before.

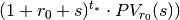

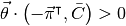

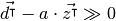

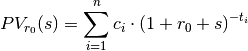

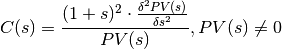

One might want to keep the calculation rate $r_0$ and look at the changes or spread (s) in relation to that: $r = r_0 + s$. Hence $r_0$ is the general or average rate across cashflows, whereas the spread (s) is the individual part covering the difference from the average/general rate in order to become mark to market.

This way the present value calculation becomes:

$$PV_{r_0}(s) = \sum_{i=1}^{n} c_i \cdot (1 + r_0 + s)^{-t_i}$$

And that is the notation we will use below.

Note

This type of spread is added to rate using bond market discounting.

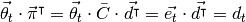

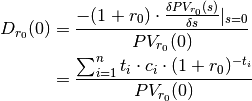

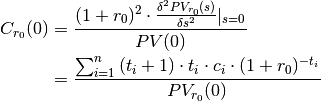

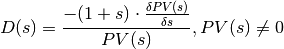

Definition, Macaulay Duration:

The Macaulay duration, or rather the bond duration as defined below, is a weighted average of the payment times using the present values of the cashflows as weights (this assumes that the cashflows are of the same sign).
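As an illustration of the weighted average, the following sketch computes the duration with plain floats. The function names and the sample bond are assumptions made for this example only.

```python
def present_value(rate, cashflows, times):
    """Sum of the discounted payments c_i * (1 + rate) ** -t_i."""
    return sum(c * (1.0 + rate) ** -t for c, t in zip(cashflows, times))


def macaulay_duration(rate, cashflows, times):
    """Average payment time, weighted by the PV of each payment."""
    pv = present_value(rate, cashflows, times)
    if pv == 0:
        raise ValueError("duration is undefined when the PV is zero")
    weighted_times = sum(
        t * c * (1.0 + rate) ** -t for c, t in zip(cashflows, times)
    )
    return weighted_times / pv


cf = [0.1, 0.1, 0.1, 0.1, 1.1]
ts = [0.783, 1.783, 2.783, 3.783, 4.783]
print(macaulay_duration(0.1, cf, ts))
# A zero bond has a single payment, so its duration equals its maturity.
print(macaulay_duration(0.05, [100.0], [7.0]))
```

Because the weights are nonnegative and sum to 1 when all cashflows have the same sign, the duration always lies between the first and the last payment time.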

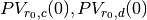

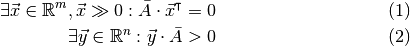

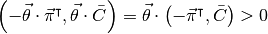

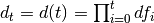

Theorem, Redington's immunity

When a rate shock (a parallel shift) is added to the calculation rate, the Macaulay duration is the time before the PV of a cashflow is risk free, ie the rate shock is absorbed.

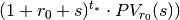

- Proof:

We look at the future value at time $t_*$ of the present value $PV_{r_0}(s)$ and examine when the future value $(1 + r_0 + s)^{t_*} \cdot PV_{r_0}(s)$ is risk free regarding rate shocks s, ie:

$$\frac{\partial}{\partial s}\left[(1 + r_0 + s)^{t_*} \cdot PV_{r_0}(s)\right]\Big|_{s=0} = 0$$

So the future value is risk free when $t_* = D_{r_0}(0)$, provided that $PV_{r_0}(0) \neq 0$.

Q.E.D.
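The statement of the theorem can be checked numerically. The sketch below estimates the sensitivity of the future value to a small rate shock with a central difference, once at the horizon equal to the duration and once at horizons one year away from it. All names and the sample cashflow are assumptions made for the illustration.

```python
def pv(spread, r0, cashflows, times):
    """PV with calculation rate r0 and spread (rate shock) added to it."""
    return sum(c * (1.0 + r0 + spread) ** -t for c, t in zip(cashflows, times))


def macaulay_duration(r0, cashflows, times):
    weighted = sum(t * c * (1.0 + r0) ** -t for c, t in zip(cashflows, times))
    return weighted / pv(0.0, r0, cashflows, times)


def future_value(spread, horizon, r0, cashflows, times):
    """The PV rolled forward to the horizon at the shocked rate."""
    return (1.0 + r0 + spread) ** horizon * pv(spread, r0, cashflows, times)


def shock_sensitivity(horizon, r0, cashflows, times, h=1e-6):
    """Central difference estimate of d(future value)/d(spread) at 0."""
    up = future_value(h, horizon, r0, cashflows, times)
    down = future_value(-h, horizon, r0, cashflows, times)
    return (up - down) / (2.0 * h)


r0 = 0.05
cf = [0.1, 0.1, 0.1, 0.1, 1.1]
ts = [0.783, 1.783, 2.783, 3.783, 4.783]
d = macaulay_duration(r0, cf, ts)
for horizon in (d - 1.0, d, d + 1.0):
    print(horizon, shock_sensitivity(horizon, r0, cf, ts))
```

The sensitivity changes sign at the duration: before it a rate increase lowers the future value, after it a rate increase raises it.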

This result is not that important. It shows that the duration is the time

before a (parallel) rate shift/shock is absorbed.

It does not show what happens, when PV is 0 which is a problem eg with interest

rate swaps.

And it is irrelevant since it would be better to measure eg the time to

illiquidity or the value at risk.

The result is only presented for historical reasons.

A bond is itself a portfolio of zero bonds. Since duration and maturity are equal

for zero bonds it follows that duration is subadditive, ie the duration of the

portfolio is at most the sum of the durations for the parts of the portfolio.

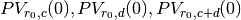

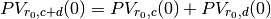

Theorem, Duration for a portfolio

The Macaulay duration for the sum of two cashflows is the present value weighted sum of the durations for each cashflow.

A necessary assumption is that all present values are nonzero.

- Proof:

Now assume two cashflows. The present value of the sum of the cashflows is the sum of the present values of each cashflow.

Q.E.D.
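A small numerical check of the portfolio result is sketched below. The weighting used, (PV_a * D_a + PV_b * D_b) / (PV_a + PV_b), is this example's reading of "present value weighted sum"; the two cashflows and the function names are hypothetical.

```python
def pv(rate, flow):
    """flow is a list of (time, payment) pairs."""
    return sum(c * (1.0 + rate) ** -t for t, c in flow)


def duration(rate, flow):
    """Macaulay duration; requires a nonzero PV."""
    return sum(t * c * (1.0 + rate) ** -t for t, c in flow) / pv(rate, flow)


rate = 0.04
flow_a = [(1.0, 5.0), (2.0, 105.0)]
flow_b = [(0.5, 3.0), (2.0, 3.0), (4.0, 103.0)]

# Summing two cashflows is the same as pooling all their payments.
combined = flow_a + flow_b

pv_a, pv_b = pv(rate, flow_a), pv(rate, flow_b)
weighted = (pv_a * duration(rate, flow_a) + pv_b * duration(rate, flow_b)) / (pv_a + pv_b)
print(duration(rate, combined), weighted)
```

The check fails with a division by zero as soon as one of the PVs or their sum is zero, which is exactly the assumption stated in the theorem.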

A similar argument can be made for the modified duration.

This is only valid when the PVs and their sum are nonzero.

Since the only elements in the portfolio formula are the PVs and durations of each cashflow, the formula can be generalized to the case where the PVs and durations come from different yield curves.

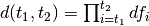

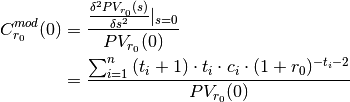

To improve the use of durations, the concept of convexity is introduced.

Definition, Macaulay Convexity:

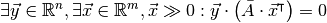

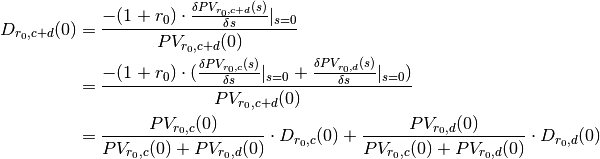

The rationale for the convexity is the following Taylor approximation around s = 0:

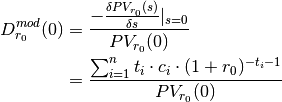

Modified duration is the elasticity of the present value with regard to the rate. As can be seen, the modified duration is almost the same as the Macaulay duration.

Definition, Modified Duration:

And in the modified case it is also possible to define a second order effect, ie

a modified convexity.

Definition, Modified Convexity:

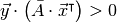

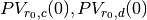

To see how modified duration and modified convexity can be used to approximate the changes in present value due to rate changes s, one has to look at the Taylor approximation of ln(PV):

When there is significant curvature/convexity the last approximation is better.

The last approximation also has a Macaulay version:
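The approximations can be compared numerically. The sketch below assumes the Macaulay quantities D = sum(t * c * (1+r0)^-t) / PV and C = sum(t * (t+1) * c * (1+r0)^-t) / PV, which is what the Taylor expansion of PV in the spread implies; these formulas, the function names and the sample bond are assumptions of the example.

```python
import math


def pv(spread, r0, cashflows, times):
    return sum(c * (1.0 + r0 + spread) ** -t for c, t in zip(cashflows, times))


def duration_and_convexity(r0, cashflows, times):
    """Macaulay duration and convexity at spread 0 (assumed definitions)."""
    pv0 = pv(0.0, r0, cashflows, times)
    dur = sum(t * pay * (1.0 + r0) ** -t for pay, t in zip(cashflows, times)) / pv0
    conv = sum(t * (t + 1.0) * pay * (1.0 + r0) ** -t
               for pay, t in zip(cashflows, times)) / pv0
    return dur, conv


def approximations(spread, r0, cashflows, times):
    pv0 = pv(0.0, r0, cashflows, times)
    dur, conv = duration_and_convexity(r0, cashflows, times)
    x = spread / (1.0 + r0)
    first_order = pv0 * (1.0 - dur * x)
    second_order = pv0 * (1.0 - dur * x + 0.5 * conv * x ** 2)
    exponential = pv0 * math.exp(-dur * x + 0.5 * (conv - dur ** 2) * x ** 2)
    return first_order, second_order, exponential


r0, s = 0.05, 0.02
cf = [0.1, 0.1, 0.1, 0.1, 1.1]
ts = [0.783, 1.783, 2.783, 3.783, 4.783]
exact = pv(s, r0, cf, ts)
for name, value in zip(("first", "second", "exp"), approximations(s, r0, cf, ts)):
    print(name, value, abs(value - exact))
```

For this sample bond both second order variants are clearly closer to the exact value than the tangent alone; how they rank against each other depends on the cashflow.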

When the present value is zero, as might be the case with eg interest rate swaps, other measures are needed.

Below are 2 such measures that try to handle the problem with zero present values:

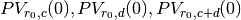

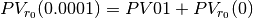

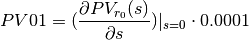

Definition, PV01:

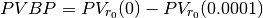

Price Value of a 01 (a basis point = 0.0001) is defined as:

Definition, PVBP:

Price Value of a Basis Point (= 0.0001) is defined as:

Using the tangent formula it is easy to see that the 2 are almost identical (except for the sign).
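The following sketch shows why a basis point measure still works when the PV is zero. Since the two defining formulas are not reproduced here, the example does not claim which one is PV01 and which is PVBP. It simply compares two hypothetical one basis point measures: the actual PV change for a shift of 0.0001, and the tangent estimate with opposite sign. All names are made up for the illustration.

```python
BASIS_POINT = 0.0001


def pv(rate, cashflows, times):
    return sum(c * (1.0 + rate) ** -t for c, t in zip(cashflows, times))


def pv_derivative(rate, cashflows, times):
    """Analytic derivative of the PV with respect to the rate."""
    return sum(-t * c * (1.0 + rate) ** (-t - 1.0)
               for c, t in zip(cashflows, times))


def change_for_one_bp(rate, cashflows, times):
    """Hypothetical measure 1: actual PV change when the rate rises 1 bp."""
    return pv(rate + BASIS_POINT, cashflows, times) - pv(rate, cashflows, times)


def tangent_for_one_bp(rate, cashflows, times):
    """Hypothetical measure 2: minus the tangent estimate of that change."""
    return -pv_derivative(rate, cashflows, times) * BASIS_POINT


# Pay 1 now and receive a 5% par bond: at a rate of 5% the PV is zero,
# so the duration is undefined, but both measures are still available.
cf = [-1.0, 0.05, 0.05, 1.05]
ts = [0.0, 1.0, 2.0, 3.0]
print(pv(0.05, cf, ts))
print(change_for_one_bp(0.05, cf, ts), tangent_for_one_bp(0.05, cf, ts))
```

The two numbers differ in sign and otherwise only by the second order term, which is of the size of the basis point squared.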

But here there is no literature suggesting how to handle portfolios. And this is a problem, since a basis point might have different probability for different cashflows.

Note

In the Macaulay setup presented in most textbooks there is one parameter, the rate, to get a Mark to Market value. This way different cashflows aren’t comparable since they have different rates.

In this presentation the individual rate is split into a common calculation rate $r_0$ and an individual spread s.

This way the Mark to Market can be assessed by the spread, and portfolio risk can be assessed by using risk calculations based on the common rate $r_0$.

This is a forerunner for the use of yield curves in the risk calculations.

One way of seeing the common calculation rate $r_0$ in the next setup is as the constant yield curve.

On the other hand it is obvious that the setup used here contains the classical Macaulay setup when the common calculation rate is zero, ie $r_0 = 0$.

Also it is obvious that the greater the spread, the less of the market value is explained by the common calculation rate $r_0$, and hence the greater risk must be associated to such a cashflow.

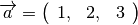

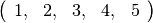

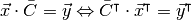

Payments occur at times expressed as dates.

These payments can be considered vectors, ie dateflows that can be added and multiplied like normal vectors after filling with zeroes at missing times so that the 2 vectors have the same set of times. So in the following there will be no difference between vectors with ordered keys and dateflows.
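The zero filling can be sketched with plain dictionaries keyed by date. This is only an illustration of the idea; the helper names below are hypothetical and do not describe the dateflow type of the finance package.

```python
from datetime import date


def align(flow_a, flow_b):
    """Fill both dateflows with zeroes so they share one sorted set of dates."""
    all_dates = sorted(set(flow_a) | set(flow_b))
    values_a = [flow_a.get(d, 0.0) for d in all_dates]
    values_b = [flow_b.get(d, 0.0) for d in all_dates]
    return all_dates, values_a, values_b


def add_dateflows(flow_a, flow_b):
    dates, values_a, values_b = align(flow_a, flow_b)
    return {d: x + y for d, x, y in zip(dates, values_a, values_b)}


def multiply_dateflows(flow_a, flow_b):
    dates, values_a, values_b = align(flow_a, flow_b)
    return {d: x * y for d, x, y in zip(dates, values_a, values_b)}


a = {date(2025, 1, 1): 5.0, date(2026, 1, 1): 105.0}
b = {date(2025, 7, 1): 2.5, date(2026, 1, 1): 2.5}
total = add_dateflows(a, b)
product = multiply_dateflows(a, b)
print(total)
print(product)
```

After alignment the two value lists behave like ordinary vectors of equal length, which is why the text treats dateflows and vectors with ordered keys as the same thing.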

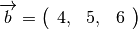

at times  and a time vector

and a time vector

at times

at times  .

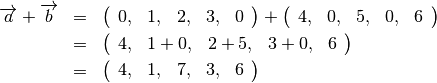

. and

and

they must first have the same set of times, ie.

they must first have the same set of times, ie.

.

Then eg.

.

Then eg.

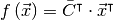

[...] is a row vector and [...]

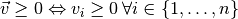

ie all vector values are nonnegative

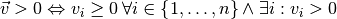

ie all vector values are nonnegative and at least one vector value is positive

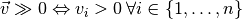

ie all vector values are positive

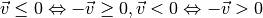

[...] and [...].

[...] where [...] is a column vector of nonzero prices.

[...] is a [...] matrix where each row is a dateflow and all dateflows have been filled with the necessary zeroes so that all dateflows have a value for all dateflow times.

[...], has dateflow [...] and price [...] or [...].

[...] be a [...] matrix.

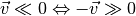

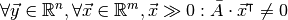

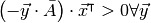

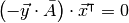

Then exactly one of the following statements is true:

[...] and [...].

[...] and [...].

[...] in the orthogonal subspace of [...] will give [...].

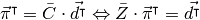

[...] is an arbitrage if either the price of the portfolio is zero, ie [...], and the dateflow is positive at at least one future point, ie [...], or if the price is negative (giving money to the owner right away), ie [...], and maybe also gives the owner a future dateflow, ie [...].

[...] such that [...].

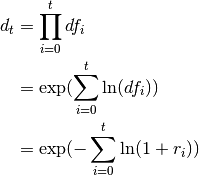

Here [...] is referred to as the discount vector.

According to [...] there exists a vector [...] such that [...].

[...], ie the number of dateflows in the financial market must exceed the number of time points to discount.

[...] is surjective.

[...] that has the unity vector [...], ie [...]. They exist due to completeness.

[...] is a [...] matrix having [...] as row vectors and rank T, [...].

[...] and [...] and hence that there can be only 1 vector of discount factors.

[...] such that [...]. Choose a number a such [...]. This is a second vector of discount factors, which is a contradiction.

[...] where the only nonzero value, 1, is at the place reserved for time t.

[...], the discount factor reserved for time t.

[...] that has the dateflow [...]. It exists due to completeness.

where [...] is the future discount factor for the minimum period number i. When observed in the market [...] is the discrete time compounded spot price for a zero bond.

[...] to time [...] can be expressed as [...]. Note that [...]. When observed in the market [...] is the discrete time compounded forward price for a zero bond.

[...] is the price for borrowing one day at day i from now. Since prices usually are positive, it means that usually [...].

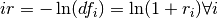

then [...] and [...].

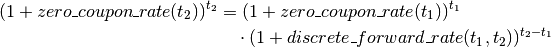

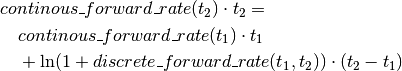

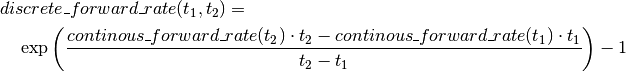

The forward rate is defined as (using no arbitrage):

.

The forward rate is defined as (using no arbitrage):

.

.

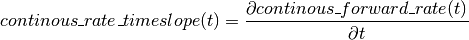

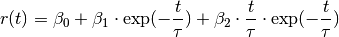

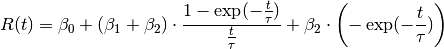

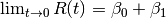

[...] is interpreted as the level of the curve, the magnitude of the slope is [...] and finally [...] is the magnitude of the curvature. [...] represents a time rescale.

[...]'s the code in the package finance and the rest of this text are based on the first formula.

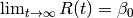

so [...] so [...] is the rate at time 0. Hence [...] then the difference between the factors of [...] the time has to be greater than 20 before the same happens.

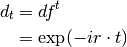

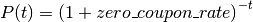

[...] for all time periods of length 1, it is a flat yield curve and the discount factors (see [...].

[...] at times (dates) [...]. In short this can be written as [...] or [...].

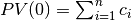

. all has the same value:

all has the same value:

, eg if a standardized bond is traded.

, eg if a standardized bond is traded. ) equal to a present value (

) equal to a present value (

is above (below) the sum

of all payments

is above (below) the sum

of all payments  , ie the rate is 0

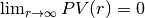

, ie the rate is 0 , ie the PV function has a

horizontal asymptote

, ie the PV function has a

horizontal asymptote  will always be positive for all

will always be positive for all

. Note that we are only looking at future times so t is

positive.

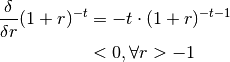

Hence the first order derivative of the discount factor is always negative

since:

. Note that we are only looking at future times so t is

positive.

Hence the first order derivative of the discount factor is always negative

since:

and the PV function is monotone

the last statement is true.

and the PV function is monotone

the last statement is true.

The future value is risk free regarding rate shocks s, ie:

$$
\begin{aligned}
\frac{\partial}{\partial s}\left[(1 + r_0 + s)^{t_*} \cdot PV_{r_0}(s)\right]\Big|_{s=0} &= 0 \\
&\Updownarrow \\
\left(\frac{\partial PV_{r_0}(s)}{\partial s} \cdot (1 + r_0 + s)^{t_*} + t_* \cdot (1 + r_0 + s)^{t_* - 1} \cdot PV_{r_0}(s)\right)\Big|_{s=0} &= 0 \\
&\Updownarrow \\
(1 + r_0)^{t_* - 1} \cdot \left(\frac{\partial PV_{r_0}(s)}{\partial s}\Big|_{s=0} \cdot (1 + r_0) + t_* \cdot PV_{r_0}(0)\right) &= 0 \\
&\Downarrow \; (PV_{r_0}(0) \neq 0) \\
(1 + r_0)^{t_* - 1} \cdot PV_{r_0}(0) \cdot \left(-D_{r_0}(0) + t_*\right) &= 0
\end{aligned}
$$


Taylor approximation of PV around s = 0:

$$
\begin{aligned}
PV_{r_0}(s) &\approx PV_{r_0}(0)\left[1 - \frac{D_{r_0}(0)}{1+r_0} \cdot s + \frac{1}{2} \cdot \frac{C_{r_0}(0)}{(1+r_0)^2} \cdot s^2\right] \\
&\Downarrow \; PV_{r_0}(0) \neq 0 \\
\frac{PV_{r_0}(s)}{PV_{r_0}(0)} - 1 &\approx \frac{-D_{r_0}(0)}{1+r_0} \cdot s + \frac{1}{2} \cdot \frac{C_{r_0}(0)}{(1+r_0)^2} \cdot s^2
\end{aligned}
$$

Taylor approximation of ln(PV):

$$
\begin{aligned}
\ln(PV_{r_0}(s)) &\approx \ln(PV_{r_0}(0)) - D_{r_0}^{mod}(0) \cdot s + \frac{C_{r_0}^{mod}(0) - D_{r_0}^{mod}(0)^2}{2} \cdot s^2 \\
&\Downarrow \; PV_{r_0}(0) \neq 0 \\
PV_{r_0}(s) &\approx PV_{r_0}(0) \cdot \exp\left[-D_{r_0}^{mod}(0) \cdot s + \frac{C_{r_0}^{mod}(0) - D_{r_0}^{mod}(0)^2}{2} \cdot s^2\right]
\end{aligned}
$$

Macaulay version:

$$
PV_{r_0}(s) \approx PV_{r_0}(0) \cdot \exp\left[-\frac{D_{r_0}(0)}{1+r_0} \cdot s + \frac{C_{r_0}(0) - D_{r_0}(0)^2}{2 \cdot (1+r_0)^2} \cdot s^2\right]
$$


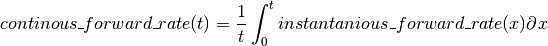

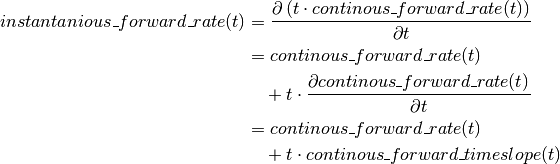

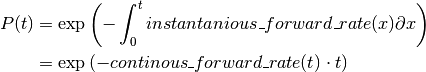

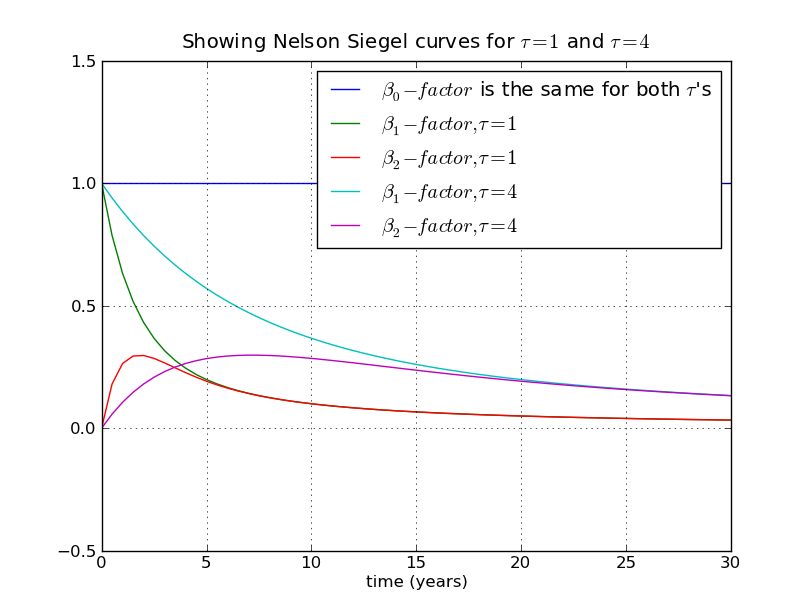

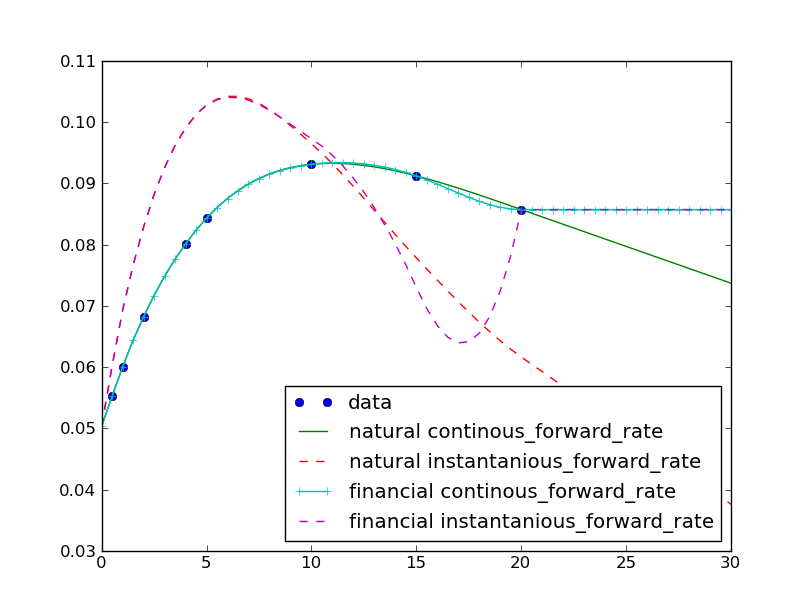

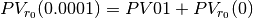

[...] are the continuous forward rates at times [...]

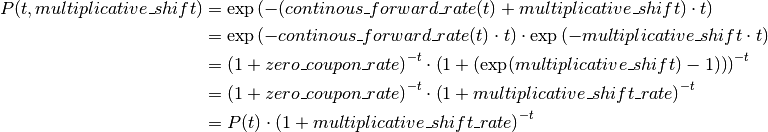

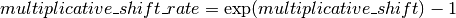

$$
\begin{aligned}
PV(s) &= \sum^{n}_{i=1} c_i \cdot e^{-(r_{t_i} + s) \cdot t_i} \\
&= \sum^{n}_{i=1} \left[c_i \cdot e^{-r_{t_i} \cdot t_i}\right] \cdot e^{-s \cdot t_i}
\end{aligned}
$$

$$
\begin{aligned}
D^{mod}(0) &= \frac{-\frac{\partial PV(s)}{\partial s}\Big|_{s=0}}{PV(0)} \\
&= \frac{\sum^{n}_{i=1} t_i \cdot \left[c_i \cdot e^{-r_{t_i} \cdot t_i}\right]}{PV(0)}
\end{aligned}
$$

[...] is both the Macaulay and the modified duration (see above). If no yieldcurve is used to discount then [...] is the Macaulay duration where [...]

[...] is both the Macaulay and the modified convexity. And if no yieldcurve is used to discount then [...] is the Macaulay convexity where [...]
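The continuously compounded formulas for PV(s) and the modified duration can be sketched as follows. The zero rates per payment time stand in for a yield curve and are made up for the example, as are the function names.

```python
import math


def pv(spread, cashflows, times, zero_rates):
    """PV(s) = sum of c_i * exp(-(r_i + s) * t_i), continuous compounding."""
    return sum(c * math.exp(-(r + spread) * t)
               for c, t, r in zip(cashflows, times, zero_rates))


def modified_duration(cashflows, times, zero_rates):
    """PV weighted average of the payment times at spread 0."""
    weighted = sum(t * c * math.exp(-r * t)
                   for c, t, r in zip(cashflows, times, zero_rates))
    return weighted / pv(0.0, cashflows, times, zero_rates)


cf = [4.0, 4.0, 104.0]
ts = [1.0, 2.0, 3.0]
curve = [0.020, 0.025, 0.030]  # hypothetical zero rates at each payment time

analytic = modified_duration(cf, ts, curve)
h = 1e-6
numeric = -(pv(h, cf, ts, curve) - pv(-h, cf, ts, curve)) / (2.0 * h) / pv(0.0, cf, ts, curve)
print(analytic, numeric)
```

With continuous compounding there is no division by 1 + r, which is why the same number serves as both the Macaulay and the modified duration.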